Implementation Walkthrough

Real-world deployment of a containerized Node.js application on AWS ECS Fargate with automated CI/CD, infrastructure as code, and production-ready security practices.

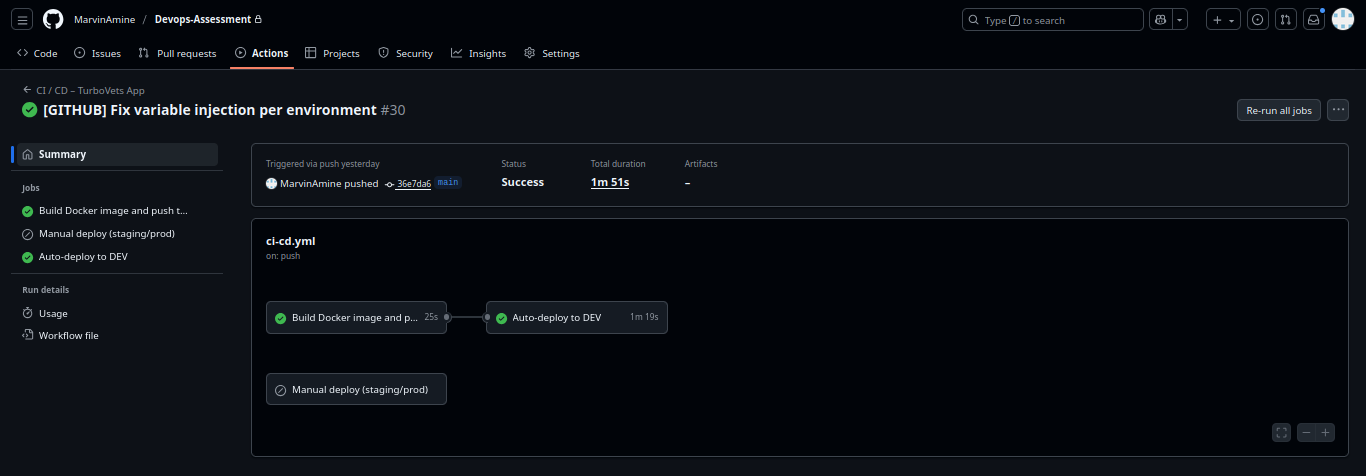

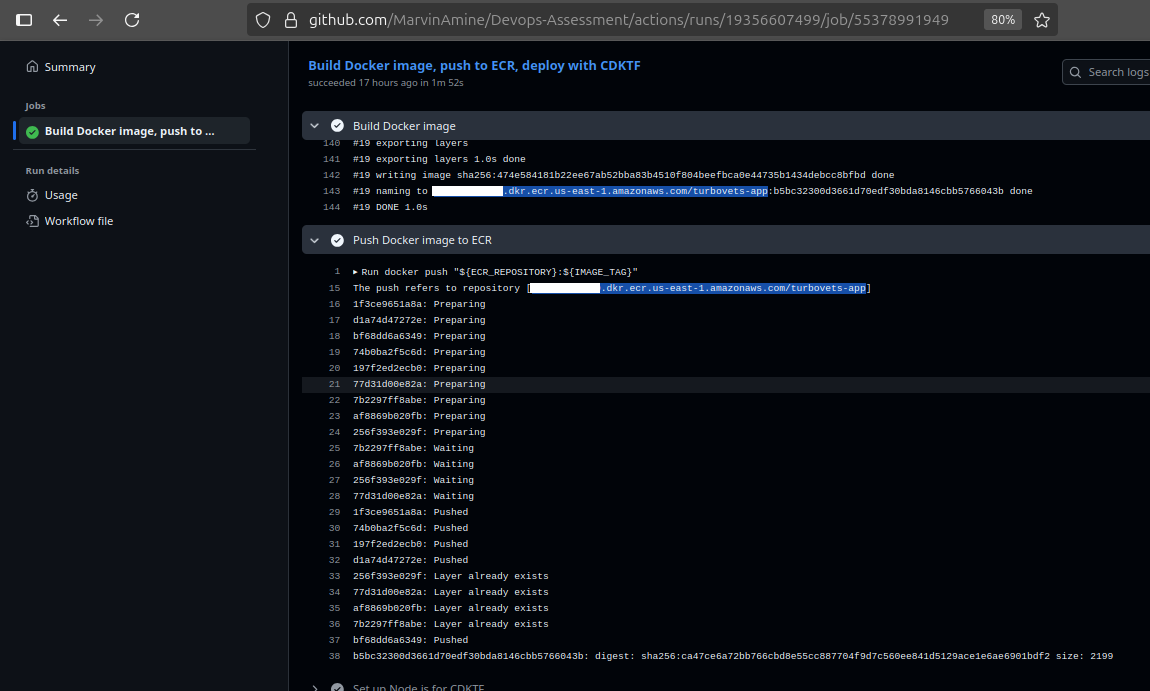

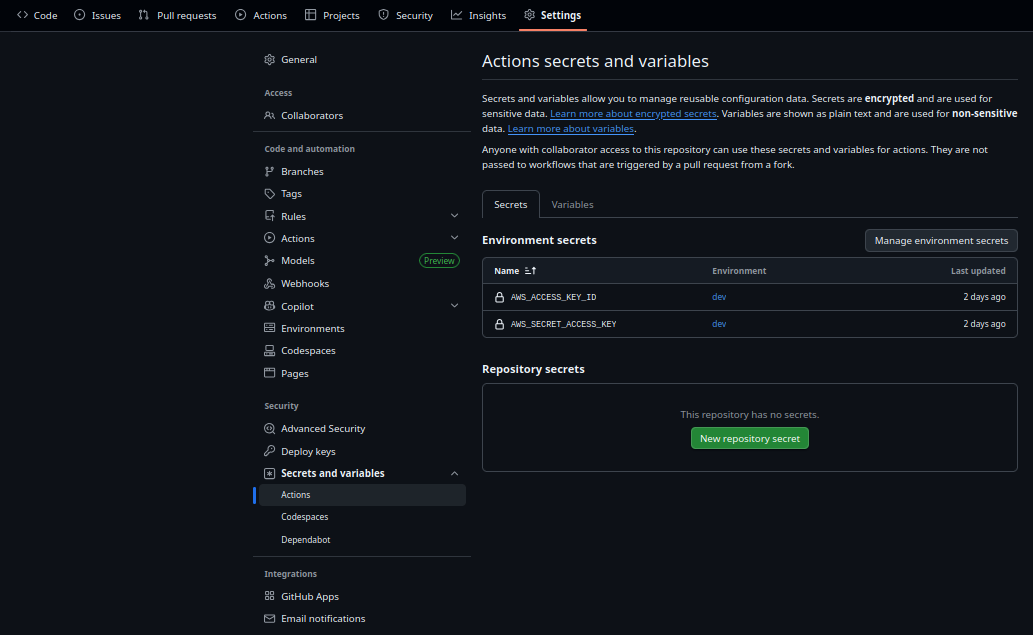

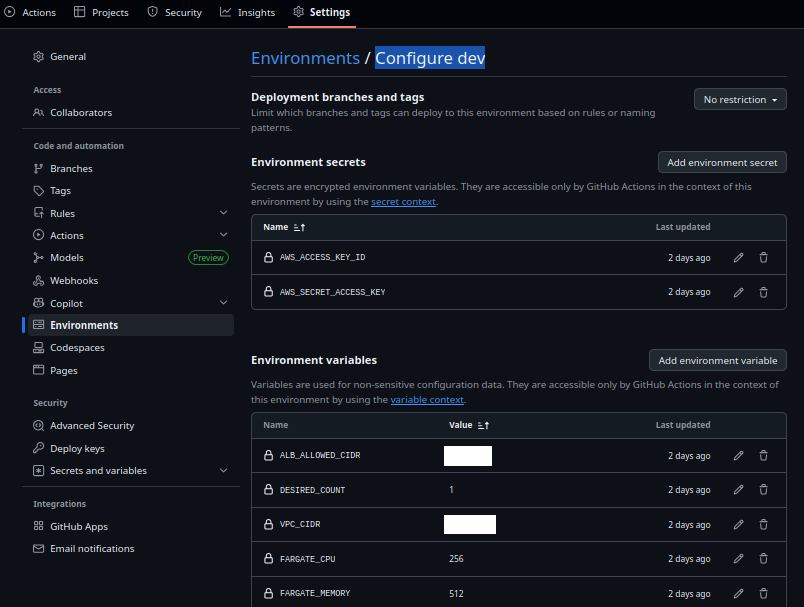

CI/CD Pipeline with GitHub Actions

Fully automated deployment pipeline that builds, tests, and deploys the application with environment-specific configuration and secrets management.

The ECR repository is managed outside of Terraform. This avoids the RepositoryNotEmptyException that would occur if Terraform tried to destroy a non-empty ECR repository.

- CI creates the repository on demand if it doesn't exist

- Terraform state does not track ECR (decoupled lifecycle)

- Images remain safe during infrastructure updates/rebuilds
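The "create on demand" step above is an idempotent check-then-create. The sketch below shows that logic under a few assumptions. `EcrLike`, `FakeEcr` and the repository name are hypothetical. In a real pipeline the two methods would wrap `DescribeRepositoriesCommand` and `CreateRepositoryCommand` from `@aws-sdk/client-ecr`, whose "missing repository" error is named `RepositoryNotFoundException`.

```typescript
// HYPOTHETICAL abstraction over the two ECR calls the CI step needs.
interface EcrLike {
  describeRepository(name: string): Promise<{ repositoryUri: string }>;
  createRepository(name: string): Promise<{ repositoryUri: string }>;
}

// The AWS SDK sets `name` on thrown errors to the exception type.
function isNotFound(err: unknown): boolean {
  return (
    typeof err === "object" &&
    err !== null &&
    (err as { name?: string }).name === "RepositoryNotFoundException"
  );
}

// Returns the existing repository URI, or creates the repository only when
// ECR reports it does not exist. Any other error (auth, throttling) is rethrown.
async function ensureRepository(
  ecr: EcrLike,
  name: string,
): Promise<{ uri: string; created: boolean }> {
  try {
    const repo = await ecr.describeRepository(name);
    return { uri: repo.repositoryUri, created: false };
  } catch (err) {
    if (!isNotFound(err)) throw err;
    const repo = await ecr.createRepository(name);
    return { uri: repo.repositoryUri, created: true };
  }
}

// In-memory fake (hypothetical) so the logic runs without AWS credentials.
class FakeEcr implements EcrLike {
  private repos = new Map<string, string>();

  async describeRepository(name: string) {
    const uri = this.repos.get(name);
    if (uri === undefined) {
      const err = new Error(`repository ${name} not found`);
      err.name = "RepositoryNotFoundException";
      throw err;
    }
    return { repositoryUri: uri };
  }

  async createRepository(name: string) {
    const uri = `123456789012.dkr.ecr.us-east-1.amazonaws.com/${name}`;
    this.repos.set(name, uri);
    return { repositoryUri: uri };
  }
}
```

Running `ensureRepository` on every pipeline run is safe. The first run creates the repository, and later runs reuse it. Because Terraform never owns the repository, a `destroy` cannot touch the pushed images.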

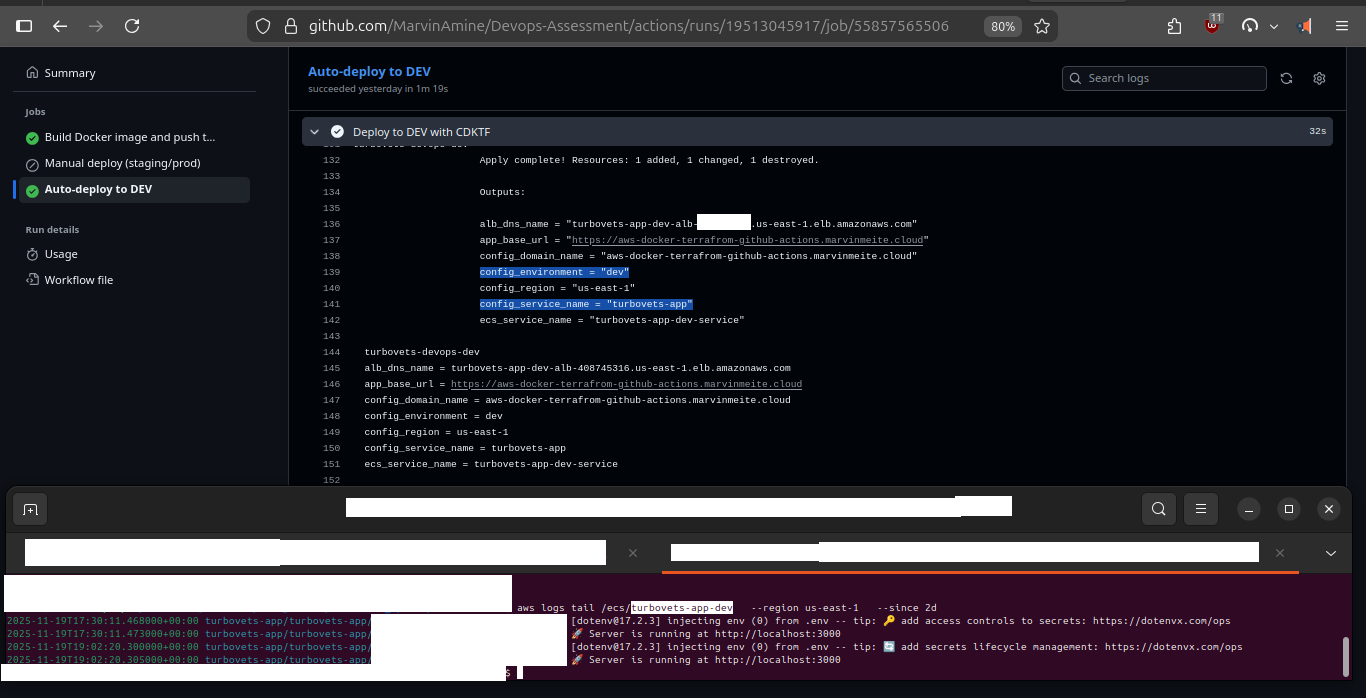

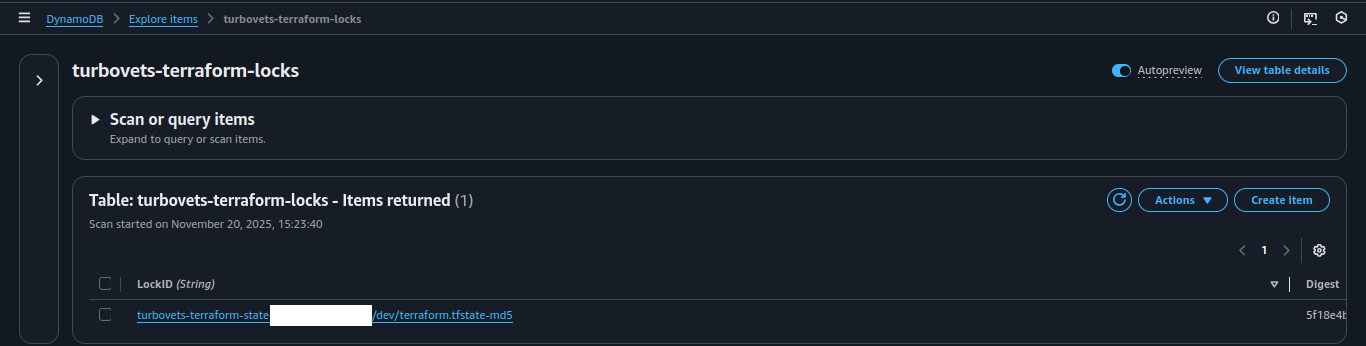

- S3 bucket: stores the terraform.tfstate file for each environment

- DynamoDB table: provides state locking to prevent concurrent cdktf deploy runs from corrupting the state

- Backend config: wired through GitHub repository variables (TF_STATE_BUCKET, TF_LOCK_TABLE)
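The state backend settings above can be assembled from those repository variables with a small helper. This is a minimal sketch under several assumptions. `AWS_REGION` as a variable name and the `turbovets-app/<env>/terraform.tfstate` key layout are both hypothetical. The object fields mirror the `bucket`, `key`, `region`, `dynamodbTable` and `encrypt` options of CDKTF's S3 backend, but the code is kept dependency-free.

```typescript
// Mirrors the S3 backend options used by CDKTF (plain object, no cdktf import).
interface S3BackendConfig {
  bucket: string;
  key: string;
  region: string;
  dynamodbTable: string;
  encrypt: boolean;
}

type Env = Record<string, string | undefined>;

// Fail fast with a clear message instead of letting Terraform error later.
function required(env: Env, name: string): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing required variable: ${name}`);
  }
  return value;
}

// One state file per environment; the key layout is an assumption.
function backendConfig(env: Env, environment: string): S3BackendConfig {
  return {
    bucket: required(env, "TF_STATE_BUCKET"),
    dynamodbTable: required(env, "TF_LOCK_TABLE"),
    region: required(env, "AWS_REGION"), // hypothetical variable name
    key: `turbovets-app/${environment}/terraform.tfstate`,
    encrypt: true,
  };
}
```

In a CDKTF stack, the returned object could be passed to `new S3Backend(this, backendConfig(process.env, "dev"))`. Separate keys keep each environment's state isolated, while the single lock table serializes concurrent deploys.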

Complete variable injection during deployment showing all environment-specific configuration

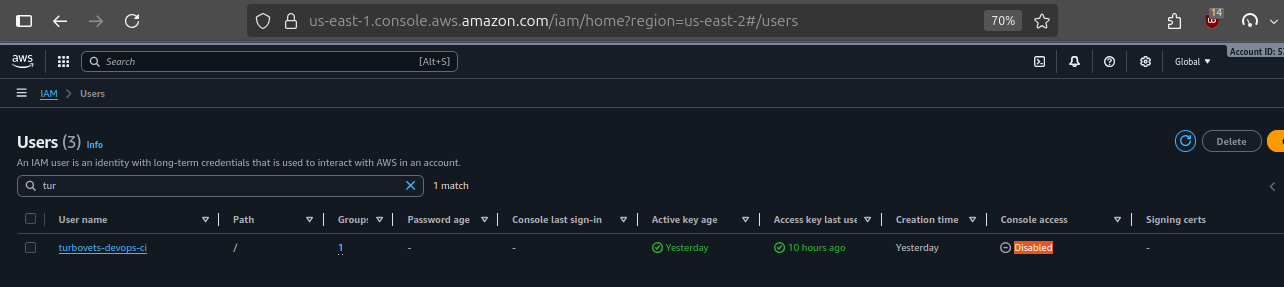

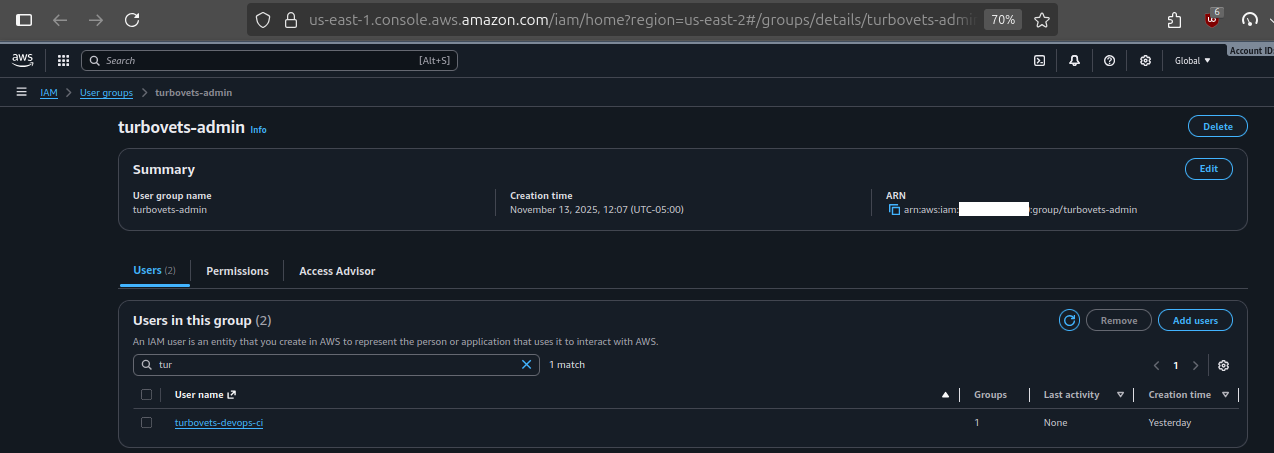

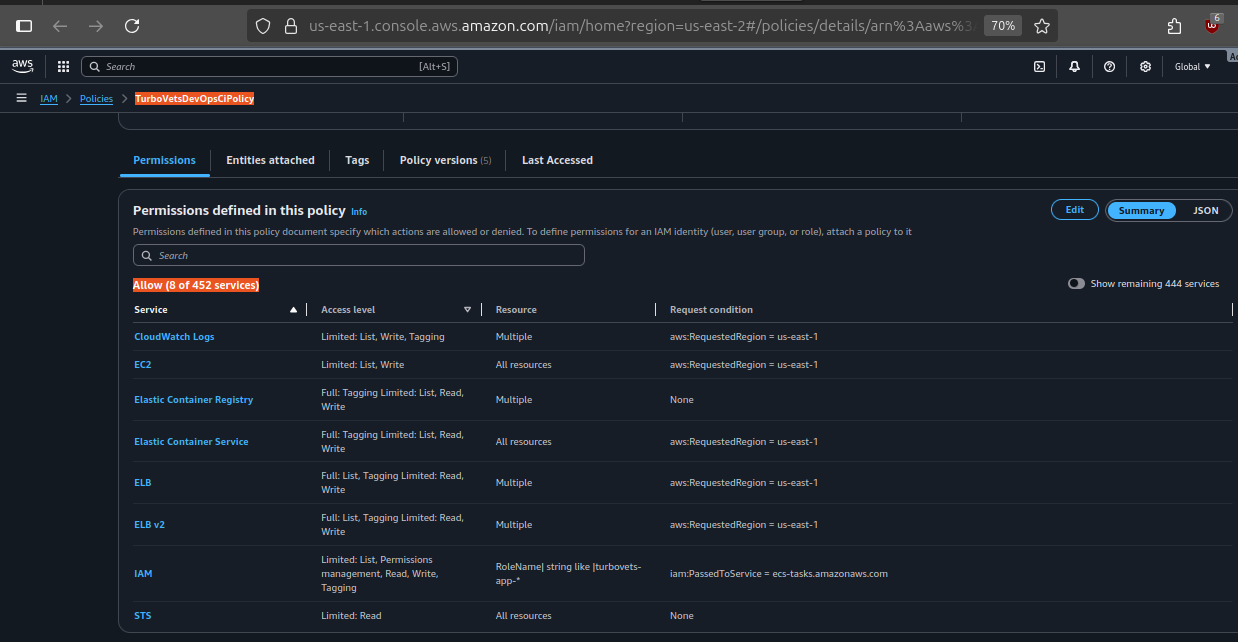

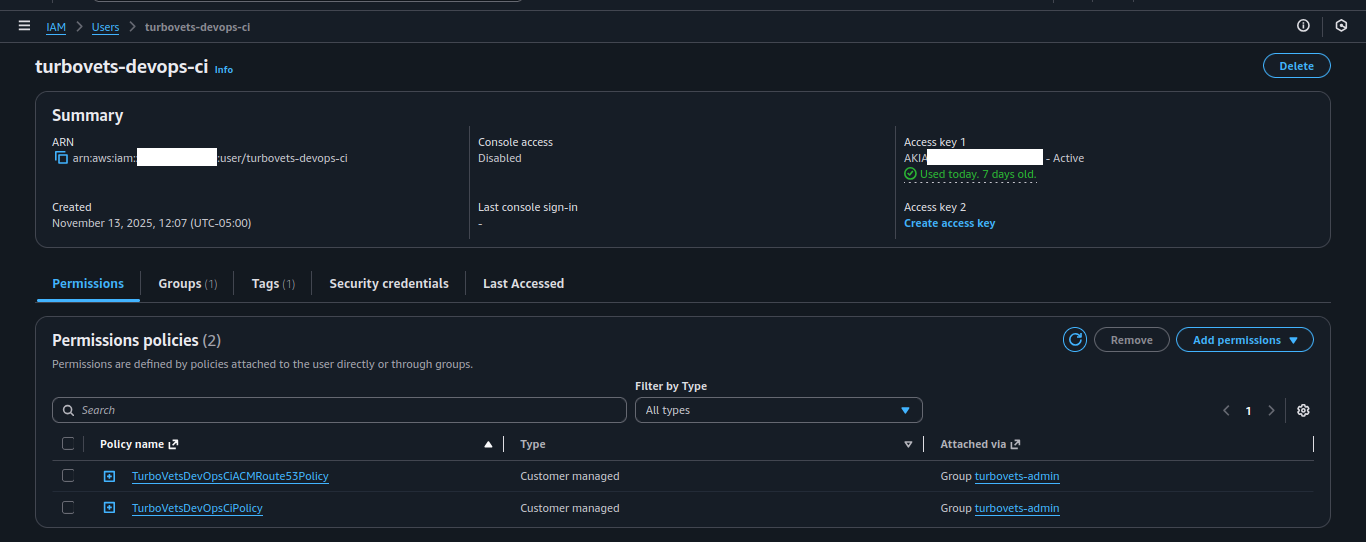

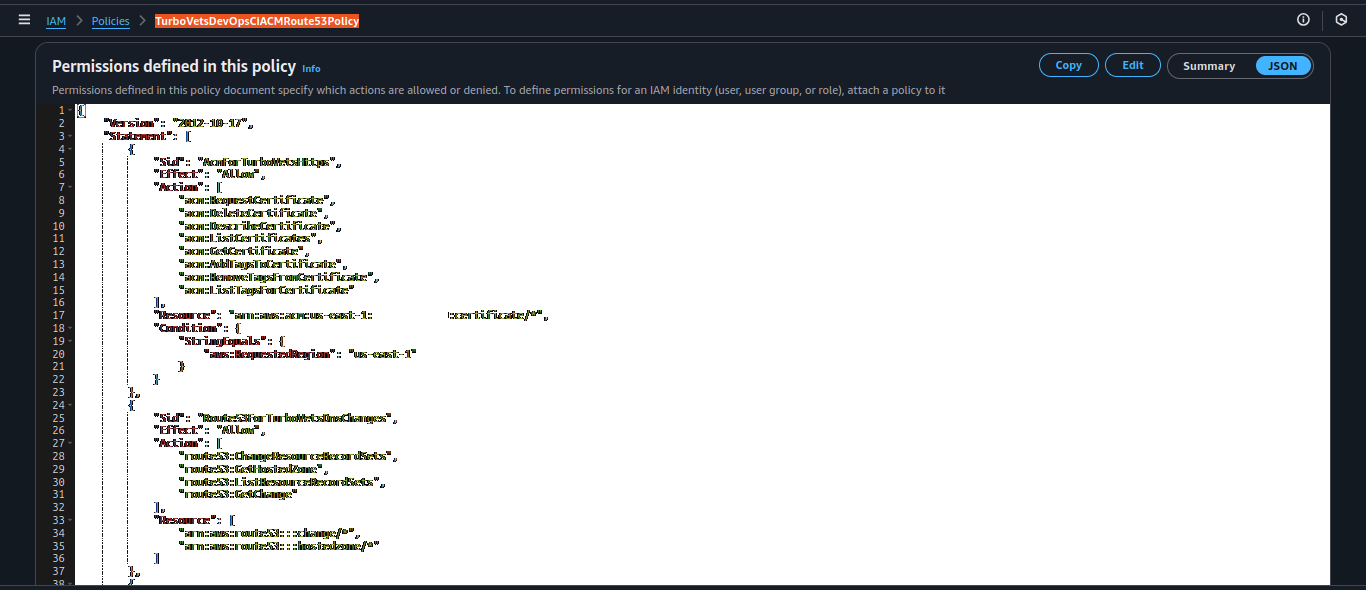
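The variable injection shown in the screenshots can be thought of as resolving one typed config object per run. The sketch below is hypothetical throughout. The environment names, the `ENVIRONMENT` variable and the sizing values are illustrative assumptions, and the values are chosen from valid Fargate CPU/memory pairs. Only `GITHUB_SHA` is a real default GitHub Actions variable.

```typescript
type Environment = "dev" | "staging" | "prod"; // hypothetical environment set

interface DeployConfig {
  environment: Environment;
  imageTag: string;
  desiredCount: number;
  cpu: number; // Fargate CPU units
  memoryMiB: number;
}

// Hypothetical per-environment sizing (valid Fargate CPU/memory pairs).
const SIZING: Record<Environment, Pick<DeployConfig, "desiredCount" | "cpu" | "memoryMiB">> = {
  dev: { desiredCount: 1, cpu: 256, memoryMiB: 512 },
  staging: { desiredCount: 1, cpu: 512, memoryMiB: 1024 },
  prod: { desiredCount: 2, cpu: 512, memoryMiB: 1024 },
};

function isEnvironment(value: string): value is Environment {
  return value === "dev" || value === "staging" || value === "prod";
}

// Resolves injected variables into one validated object, failing fast on bad input.
function loadDeployConfig(vars: Record<string, string | undefined>): DeployConfig {
  const environment = vars["ENVIRONMENT"] ?? "";
  if (!isEnvironment(environment)) {
    throw new Error(`Unknown ENVIRONMENT: "${environment}"`);
  }
  // GITHUB_SHA is set by GitHub Actions; tagging by commit keeps deploys traceable.
  const sha = vars["GITHUB_SHA"];
  if (!sha) throw new Error("GITHUB_SHA is not set");
  return { environment, imageTag: sha.slice(0, 7), ...SIZING[environment] };
}
```

Centralizing this in one function means a typo in an environment name fails the pipeline before any infrastructure change is planned.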

IAM Configuration & Security

Least-privilege IAM setup with dedicated CI/CD user, grouped permissions, and role-based access control for ECS tasks.

turbovets-github-actions-group

Full permission breakdown showing inherited group policies and the dedicated Route 53/ACM policy.

- Dedicated CI/CD user: Separate from human users, giving a clear audit trail

- Group-based permissions: Easier to manage and update policies

- No inline policies: All permissions managed through groups and managed policies

- Access key rotation: Credentials can be rotated without code changes

- Task execution role: For ECS infrastructure operations (ECR pull, CloudWatch logs)

- Task application role: Empty by default, extended only when app needs AWS APIs
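The split between the two ECS roles described above can be expressed as plain IAM policy data. This is a sketch, and the role names are hypothetical. The trust principal `ecs-tasks.amazonaws.com` and the AWS-managed `AmazonECSTaskExecutionRolePolicy` ARN are the standard values for ECS. The documents would be serialized with `JSON.stringify` for the `assume_role_policy` argument of Terraform's `aws_iam_role` resource.

```typescript
// Subset of the IAM JSON policy grammar needed here.
interface PolicyStatement {
  Effect: "Allow" | "Deny";
  Principal?: { Service: string };
  Action: string | string[];
  Resource?: string | string[];
}

interface PolicyDocument {
  Version: "2012-10-17";
  Statement: PolicyStatement[];
}

interface RoleSpec {
  name: string;
  assumeRolePolicy: PolicyDocument;
  managedPolicyArns: string[];
}

// Both roles are assumed by the ECS tasks service principal.
const ecsTasksTrust: PolicyDocument = {
  Version: "2012-10-17",
  Statement: [
    { Effect: "Allow", Principal: { Service: "ecs-tasks.amazonaws.com" }, Action: "sts:AssumeRole" },
  ],
};

function ecsRoles(prefix: string): { execution: RoleSpec; task: RoleSpec } {
  return {
    // Used by ECS itself: pull images from ECR, write to CloudWatch Logs.
    execution: {
      name: `${prefix}-task-execution-role`, // hypothetical naming
      assumeRolePolicy: ecsTasksTrust,
      managedPolicyArns: ["arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy"],
    },
    // Used by application code; starts with no permissions (least privilege).
    task: {
      name: `${prefix}-task-role`, // hypothetical naming
      assumeRolePolicy: ecsTasksTrust,
      managedPolicyArns: [],
    },
  };
}
```

Keeping the task role empty means a compromised container gets no AWS API access by default. Permissions are added there only when the application needs a specific service.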

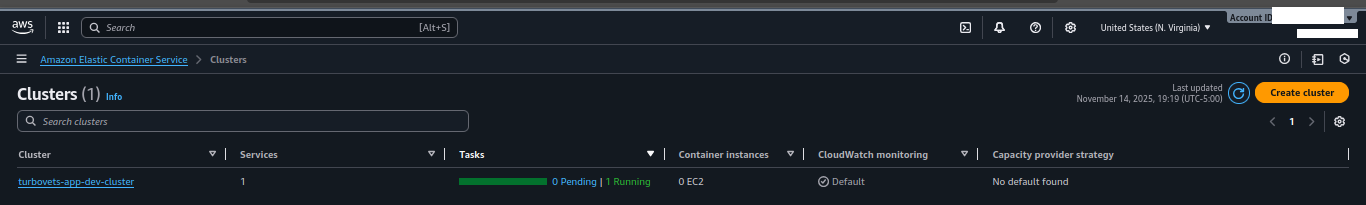

AWS Infrastructure Deployment

Multi-AZ VPC with ECS Fargate cluster, Application Load Balancer, and production-ready networking configuration deployed via CDKTF.
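A multi-AZ VPC is typically carved into one public and one private subnet per Availability Zone. The sketch below shows that CIDR arithmetic under stated assumptions. The `10.0.0.0/16` range, the `/24` subnet size, the "private subnets start at index 10" convention and the assumption that tasks sit in private subnets are all hypothetical. The `subnet` helper is similar to Terraform's `cidrsubnet()`, except that it takes a target prefix length rather than a number of added bits.

```typescript
// Dotted IPv4 <-> integer, using arithmetic (not bitwise ops) to avoid sign issues.
function ipToInt(ip: string): number {
  const parts = ip.split(".").map(Number);
  if (parts.length !== 4 || parts.some((p) => !Number.isInteger(p) || p < 0 || p > 255)) {
    throw new Error(`invalid IPv4 address: ${ip}`);
  }
  return parts.reduce((acc, p) => acc * 256 + p, 0);
}

function intToIp(n: number): string {
  return [24, 16, 8, 0].map((shift) => Math.floor(n / 2 ** shift) % 256).join(".");
}

// Returns the index-th subnet of size /newPrefix inside parentCidr.
function subnet(parentCidr: string, newPrefix: number, index: number): string {
  const [base = "", prefixStr = ""] = parentCidr.split("/");
  if (!base || !prefixStr) throw new Error(`invalid CIDR: ${parentCidr}`);
  const prefix = Number(prefixStr);
  if (!Number.isInteger(prefix) || newPrefix < prefix || newPrefix > 32) {
    throw new Error("invalid prefix length");
  }
  if (index < 0 || index >= 2 ** (newPrefix - prefix)) throw new Error("subnet index out of range");
  const parentSize = 2 ** (32 - prefix);
  const baseInt = ipToInt(base);
  const network = baseInt - (baseInt % parentSize); // align to the parent boundary
  return `${intToIp(network + index * 2 ** (32 - newPrefix))}/${newPrefix}`;
}

interface AzSubnets {
  az: string;
  publicCidr: string; // ALB lives here
  privateCidr: string; // Fargate tasks (assumption)
}

// Hypothetical layout: public at indexes 0..n-1, private starting at 10.
function layoutSubnets(vpcCidr: string, azs: string[]): AzSubnets[] {
  return azs.map((az, i) => ({
    az,
    publicCidr: subnet(vpcCidr, 24, i),
    privateCidr: subnet(vpcCidr, 24, 10 + i),
  }));
}
```

For `layoutSubnets("10.0.0.0/16", ["us-east-1a", "us-east-1b"])` this yields public `10.0.0.0/24` and `10.0.1.0/24` and private `10.0.10.0/24` and `10.0.11.0/24`. Leaving a gap between the two ranges makes room to add more AZs later without renumbering.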

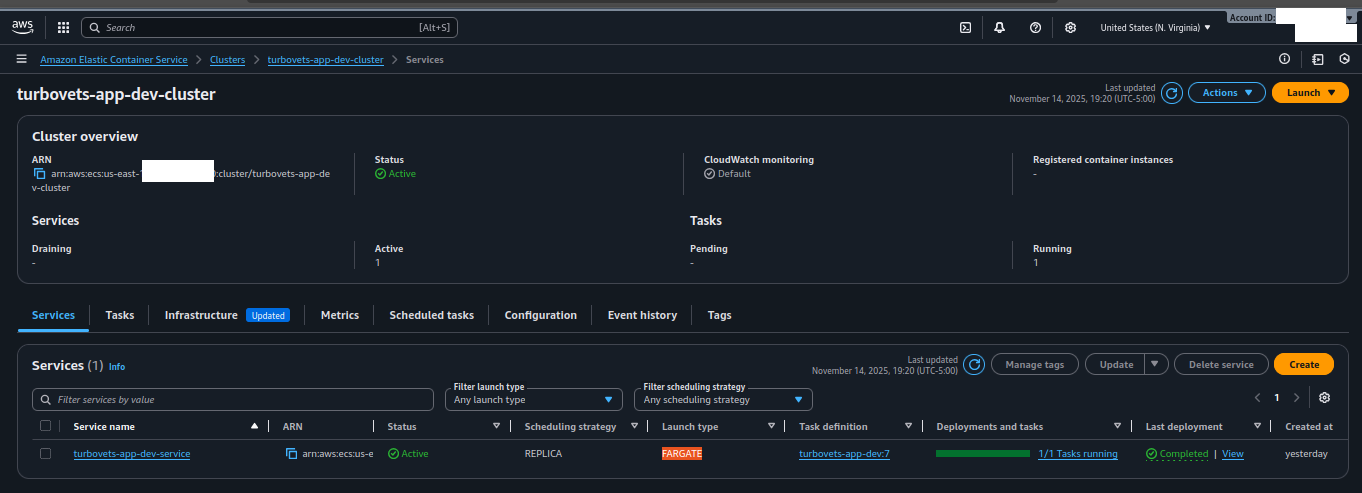

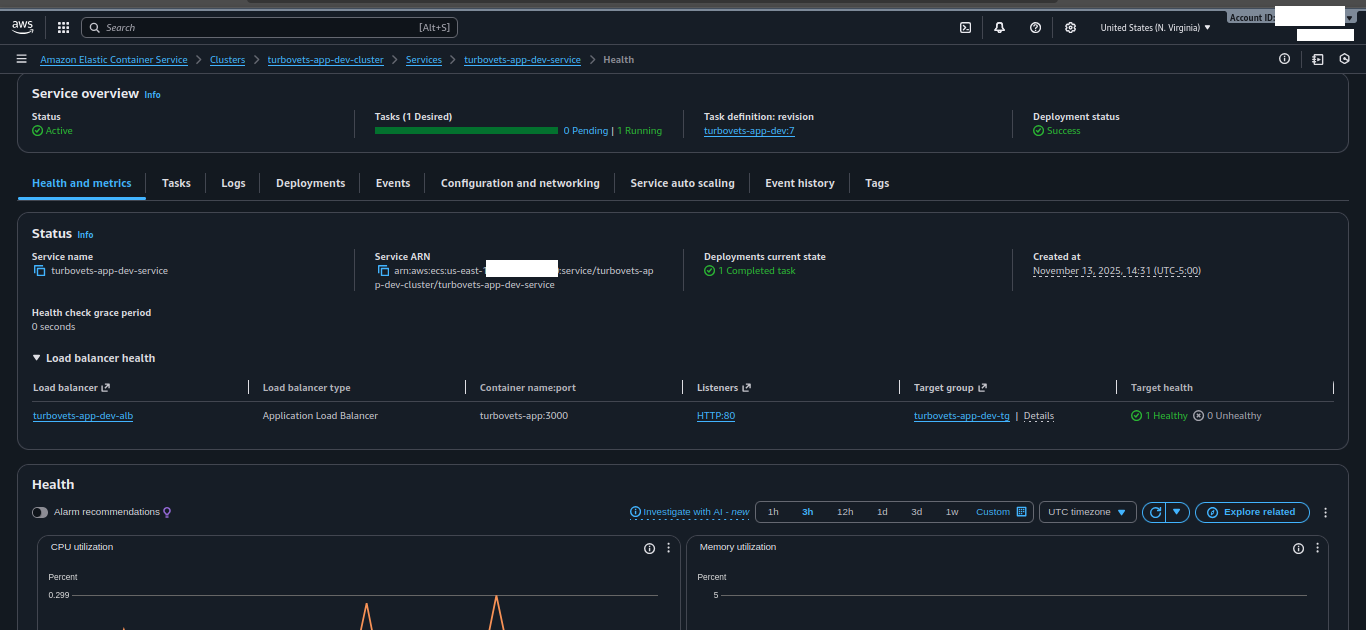

turbovets-app-dev-cluster with running service

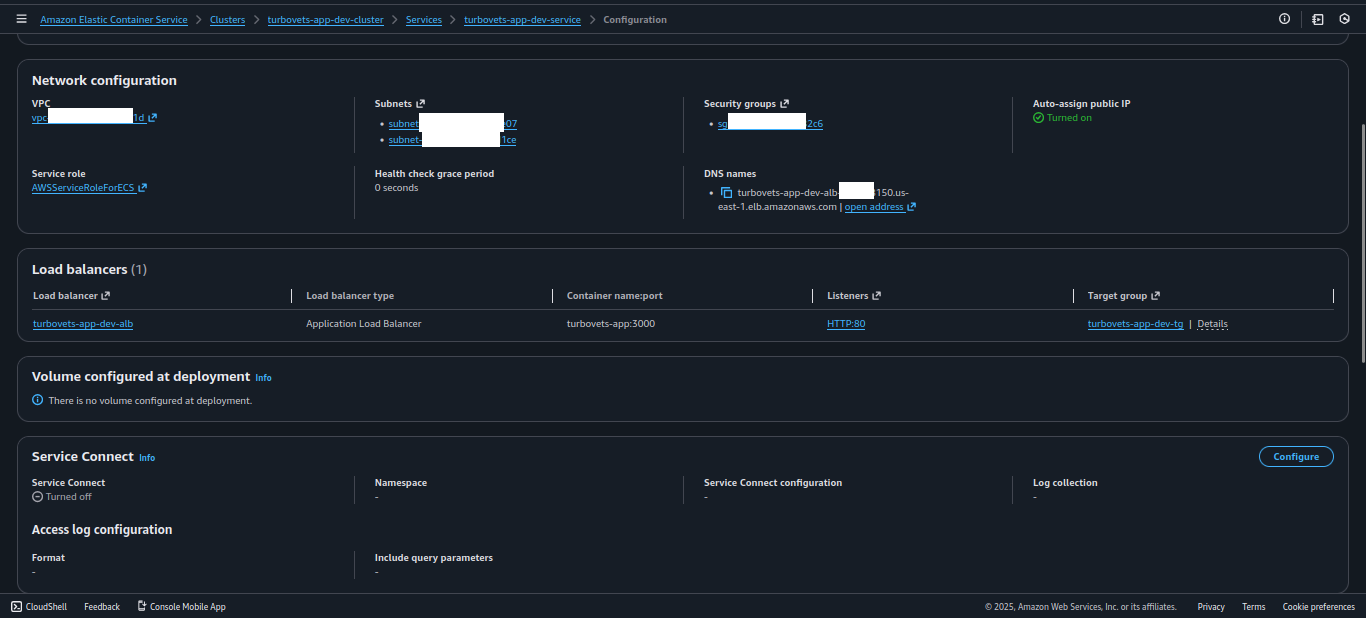

- All core resources (VPC, ALB, ECS, security groups) are provisioned via CDK for Terraform.

- Traffic enters through Route 53 → ALB (HTTPS) → ECS Fargate tasks.

- Observability via CloudWatch Logs for each ECS task family and environment.

- Networking and IAM are aligned with AWS Well-Architected best practices for small, production-grade workloads.
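The Route 53 → ALB → ECS path above is usually enforced with two security groups. The ALB accepts public traffic, and the tasks accept traffic only from the ALB's group. The model below is a simplified, hypothetical illustration rather than Terraform code. The group IDs, the container port 3000 and the port-80 rule (for an HTTP-to-HTTPS redirect) are assumptions, and the CIDR match is reduced to an exact or `0.0.0.0/0` check.

```typescript
type Source = { kind: "cidr"; cidr: string } | { kind: "sg"; id: string };

interface IngressRule {
  port: number;
  from: Source;
}

interface SecurityGroup {
  id: string;
  ingress: IngressRule[];
}

const CONTAINER_PORT = 3000; // assumption: the Node.js app listens on 3000

const albSg: SecurityGroup = {
  id: "alb-sg", // hypothetical ID
  ingress: [
    { port: 443, from: { kind: "cidr", cidr: "0.0.0.0/0" } }, // HTTPS from the internet
    { port: 80, from: { kind: "cidr", cidr: "0.0.0.0/0" } }, // HTTP, redirected to HTTPS
  ],
};

// Tasks accept traffic only when it originates from the ALB's security group.
const taskSg: SecurityGroup = {
  id: "task-sg", // hypothetical ID
  ingress: [{ port: CONTAINER_PORT, from: { kind: "sg", id: albSg.id } }],
};

// Simplified check: true if any rule matches both port and source.
// "0.0.0.0/0" stands in for real CIDR containment logic.
function isAllowed(sg: SecurityGroup, port: number, source: Source): boolean {
  return sg.ingress.some((rule) => {
    if (rule.port !== port) return false;
    const from = rule.from;
    if (from.kind === "sg") return source.kind === "sg" && source.id === from.id;
    return source.kind === "cidr" && (from.cidr === "0.0.0.0/0" || from.cidr === source.cidr);
  });
}
```

Referencing the ALB's security group, rather than a CIDR range, keeps the task rule valid as ALB node IPs change. Because Fargate tasks use `awsvpc` networking, the ALB target group must use the `ip` target type.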